Building a Custom MTurk Platform for Research

As part of my thesis research, I built a system that integrates Amazon Mechanical Turk (MTurk) with a custom survey platform featuring interactive data visualizations. This post covers the technical implementation and the design decisions behind this approach to collecting academic research data.

The Challenge

Traditional survey platforms often fall short when conducting research that requires:

Complex interactive data visualizations

Real-time 3D scatter plots and clustering displays

Advanced mathematical notation and formulas

Seamless integration with crowdsourcing platforms

Robust data validation and quality control

My research required participants to interact with CBOW (Continuous Bag of Words) models and clustering visualizations, making it essential to build a custom solution that could handle these requirements while maintaining the scalability and participant management capabilities of MTurk.

MTurk Project Configuration

The system was successfully deployed across multiple MTurk batches with carefully configured parameters to ensure high-quality data collection:

Survey Configuration Details

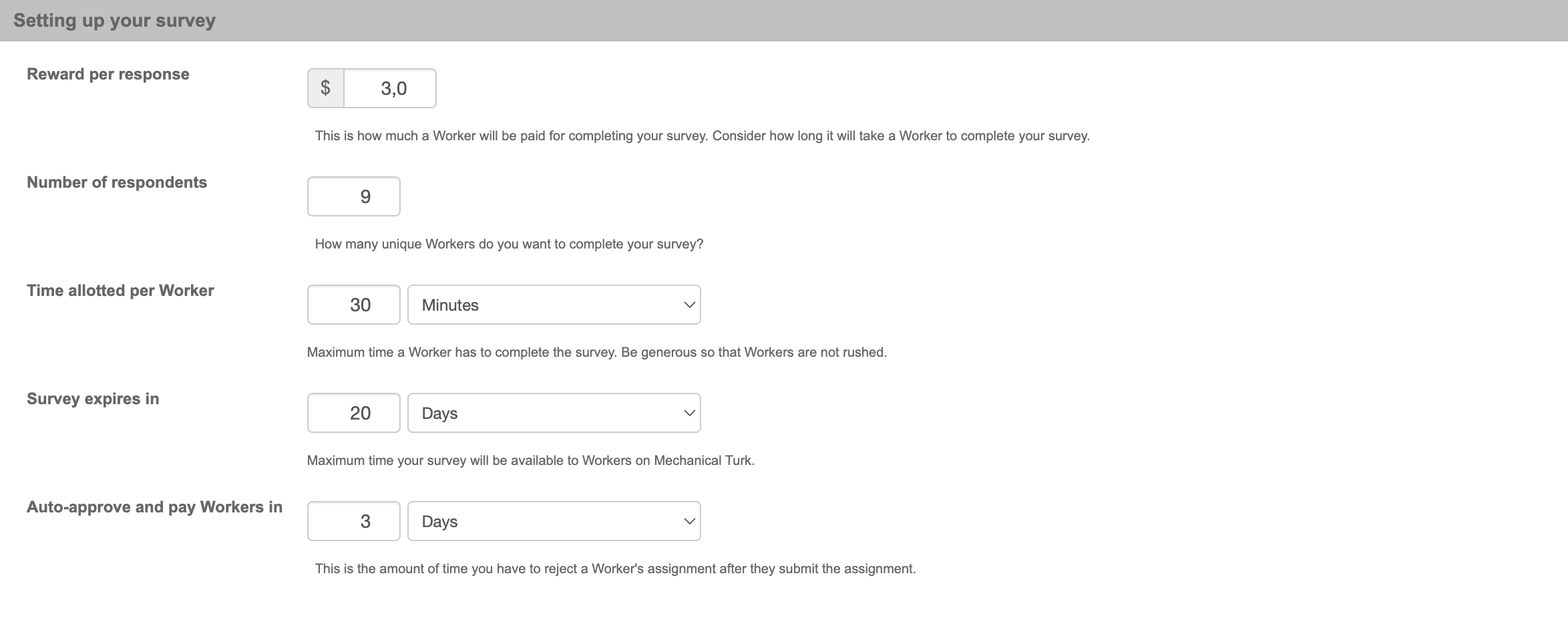

The MTurk project was configured with the following specifications:

Key Configuration Parameters:

Reward per response: $3.00 (competitive compensation for complex visualization tasks)

Number of respondents: 9 participants per batch

Time allotted per Worker: 30 minutes (generous time allowance for complex interactions)

Survey expires in: 20 days (ample opportunity for participant recruitment)

Auto-approve and pay Workers in: 3 days (quick payment processing)
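Here is a small calculator that turns these parameters into the cost of one batch. It is a sketch, not part of the original system. It works in cents to avoid floating-point rounding, and the function name is hypothetical. The fee percentages are assumptions based on MTurk's published requester pricing at the time of writing: a 20% commission on rewards, an additional 20% for HITs with 10 or more assignments, and an additional 5% when Masters are required. Check current pricing before relying on them.

```typescript
// Hypothetical helper for estimating what one MTurk batch costs the requester.
// ASSUMPTION: fee percentages follow MTurk's published pricing; verify before budgeting.
interface BatchConfig {
  rewardCents: number;     // reward per assignment, e.g. 300 for $3.00
  assignments: number;     // respondents per batch
  requireMasters: boolean; // whether the Masters qualification is required
}

interface BatchCost {
  rewardsCents: number;
  feesCents: number;
  totalCents: number;
}

const BASE_FEE_PERCENT = 20;        // assumed base commission on rewards
const LARGE_BATCH_FEE_PERCENT = 20; // assumed surcharge for 10+ assignments
const MASTERS_FEE_PERCENT = 5;      // assumed surcharge when Masters are required

export function estimateBatchCost(config: BatchConfig): BatchCost {
  const rewardsCents = config.rewardCents * config.assignments;
  let feePercent = BASE_FEE_PERCENT;
  if (config.assignments >= 10) feePercent += LARGE_BATCH_FEE_PERCENT;
  if (config.requireMasters) feePercent += MASTERS_FEE_PERCENT;
  const feesCents = Math.round((rewardsCents * feePercent) / 100);
  return { rewardsCents, feesCents, totalCents: rewardsCents + feesCents };
}

// The configuration above: $3.00 x 9 respondents, Masters required.
const cost = estimateBatchCost({ rewardCents: 300, assignments: 9, requireMasters: true });
// → { rewardsCents: 2700, feesCents: 675, totalCents: 3375 }
```

Under these assumptions, a nine-person batch comes to $27.00 in rewards plus $6.75 in fees, or $33.75. It also stays just below the 10-assignment threshold for the larger surcharge.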

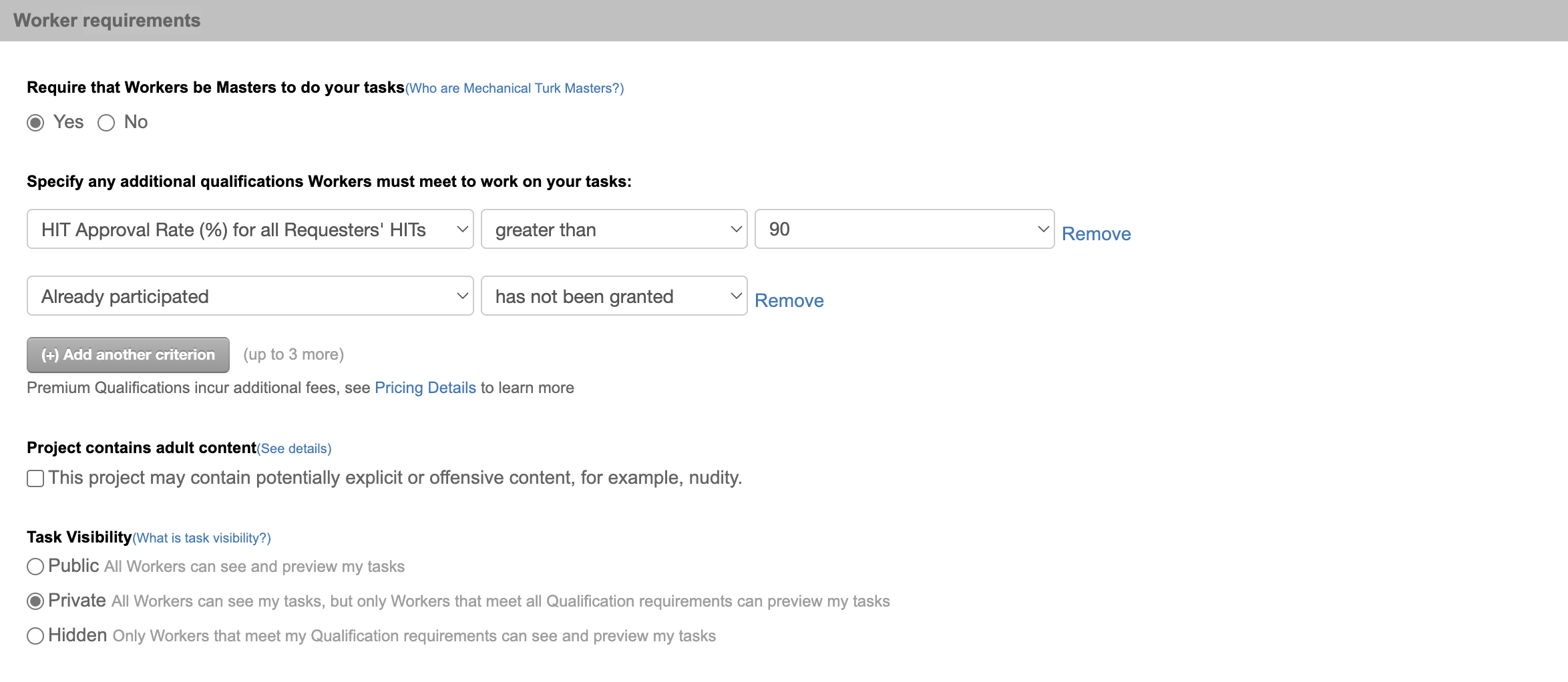

Worker Quality Requirements

To ensure high-quality responses, strict worker requirements were implemented:

Quality Control Measures:

MTurk Masters: Required (ensuring only high-performing workers)

HIT Approval Rate: >90% (filtering for reliable participants)

Previous participation: Blocked to prevent repeat responses

Task Visibility: Private (only qualified workers can preview and accept the task)
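These requirements were set in the requester website. The MTurk API expresses the same thing as a list of `QualificationRequirement` entries, and the sketch below mirrors that structure with local types instead of an SDK. The Masters and approval-rate IDs are MTurk system qualification IDs that should be verified against the current documentation (the sandbox uses a different Masters ID). The previous-participant qualification is a hypothetical custom qualification you would create and grant to earlier workers. Mapping "Private" visibility to `PreviewAndAccept` is an assumption.

```typescript
// Local mirror of the MTurk API's QualificationRequirement shape (no SDK import).
type Comparator =
  | "LessThan" | "LessThanOrEqualTo" | "GreaterThan" | "GreaterThanOrEqualTo"
  | "EqualTo" | "NotEqualTo" | "Exists" | "DoesNotExist" | "In" | "NotIn";

interface QualificationRequirement {
  QualificationTypeId: string;
  Comparator: Comparator;
  IntegerValues?: number[];
  ActionsGuarded?: "Accept" | "PreviewAndAccept" | "DiscoverPreviewAndAccept";
}

// System qualification IDs (production); verify against current MTurk docs before use.
const MASTERS_PRODUCTION_ID = "2F1QJWKUDD8XADTFD2Q0G6UTO95ALH";
const PERCENT_APPROVED_ID = "000000000000000000L0";
// HYPOTHETICAL: custom qualification granted to everyone who took part in an earlier batch.
const PREVIOUS_PARTICIPANT_ID = "YOUR_CUSTOM_QUALIFICATION_ID";

// ASSUMPTION: "PreviewAndAccept" matches the UI's Private visibility, i.e. unqualified
// workers can still see the task in search results but cannot preview or accept it.
const guard = "PreviewAndAccept" as const;

export const qualificationRequirements: QualificationRequirement[] = [
  // Masters: the worker only needs to hold the qualification.
  { QualificationTypeId: MASTERS_PRODUCTION_ID, Comparator: "Exists", ActionsGuarded: guard },
  // HIT approval rate strictly above 90%.
  { QualificationTypeId: PERCENT_APPROVED_ID, Comparator: "GreaterThan", IntegerValues: [90], ActionsGuarded: guard },
  // Exclude anyone who already holds the "previous participant" qualification.
  { QualificationTypeId: PREVIOUS_PARTICIPANT_ID, Comparator: "DoesNotExist", ActionsGuarded: guard },
];
```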

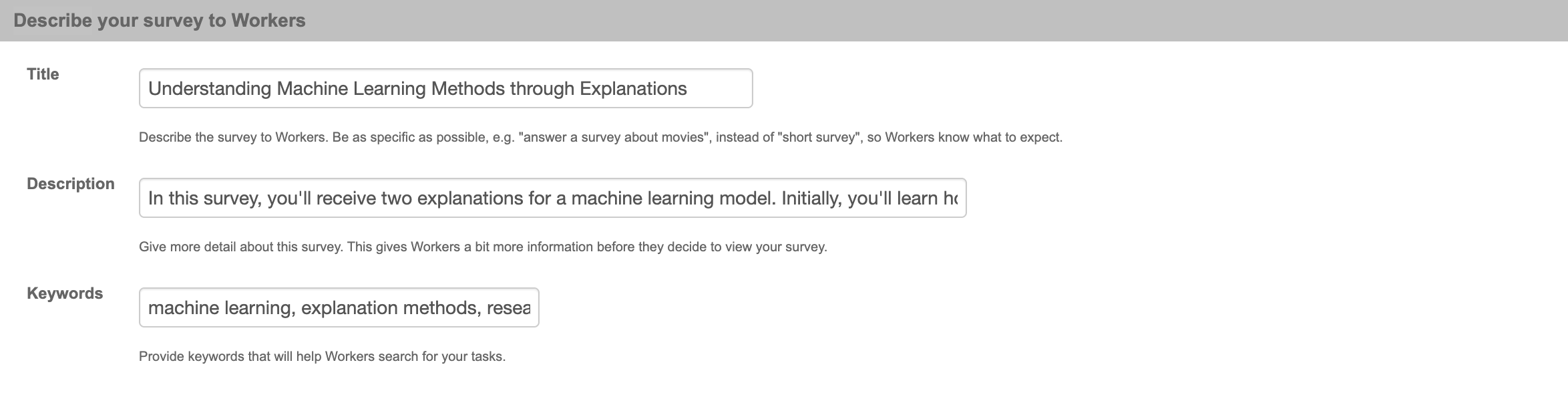

Study Description and Marketing

The study was presented to workers with clear, engaging descriptions:

Project Title: "Understanding Machine Learning Methods through Explanations"

Description: "In this survey, you'll receive two explanations for a machine learning model. Initially, you'll learn ho[w]..."

Keywords: "machine learning, explanation methods, research"
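The same settings can also be expressed through the MTurk API. The sketch below is plain data shaped like the parameters of the `CreateHIT` operation, with no SDK call. It mainly shows the unit conversions, because the API takes durations in seconds. A real request also needs the task content, either a `Question` or a `HITLayoutId`, which is omitted here.

```typescript
// Sketch: the requester-UI settings above expressed as CreateHIT-style parameters.
// Plain data only; field names follow the MTurk CreateHIT API.
const MINUTE = 60;
const DAY = 24 * 60 * MINUTE;

interface HitParams {
  Title: string;
  Description: string;
  Keywords: string;                   // comma-separated
  Reward: string;                     // USD amount as a string
  MaxAssignments: number;
  AssignmentDurationInSeconds: number;
  LifetimeInSeconds: number;
  AutoApprovalDelayInSeconds: number;
}

export const hitParams: HitParams = {
  Title: "Understanding Machine Learning Methods through Explanations",
  // The full description is truncated in the source; only its opening is reproduced.
  Description: "In this survey, you'll receive two explanations for a machine learning model. ...",
  Keywords: "machine learning, explanation methods, research",
  Reward: "3.00",
  MaxAssignments: 9,
  AssignmentDurationInSeconds: 30 * MINUTE, // 1,800 s
  LifetimeInSeconds: 20 * DAY,              // 1,728,000 s
  AutoApprovalDelayInSeconds: 3 * DAY,      // 259,200 s
};
```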

This approach successfully attracted participants interested in machine learning research, resulting in high engagement rates and quality responses.

Architecture Overview

The system consists of three main components:

Custom Survey Platform (tobias-wetzel.com/thesis/survey/)

MTurk Integration Layer (cbow-lift.html)

Advanced Visualization Engine (DeckGL + WebAssembly)

The MTurk Integration Layer

The core of the system is the bridge between MTurk and the custom survey platform. The cbow-lift.html file serves as the MTurk HIT template and embeds the survey in an iframe.
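The template's markup is not reproduced in this post. Here is a minimal sketch of one way to wire it up. It assumes the HIT page's own URL carries MTurk's `assignmentId` query parameter, which MTurk sets to `ASSIGNMENT_ID_NOT_AVAILABLE` while a worker is only previewing, and it forwards that ID to the embedded survey. The function name and the `embed`, `assignmentId`, and `preview` parameters on the survey URL are hypothetical.

```typescript
// MTurk uses this value while the worker is only previewing the HIT.
const PREVIEW_ASSIGNMENT_ID = "ASSIGNMENT_ID_NOT_AVAILABLE";

// Hypothetical helper: derive the iframe src for the embedded survey from the
// HIT page's own URL (in the browser, pass window.location.href).
export function buildSurveySrc(hitPageUrl: string, surveyBaseUrl: string): string {
  const assignmentId =
    new URL(hitPageUrl).searchParams.get("assignmentId") ?? PREVIEW_ASSIGNMENT_ID;
  const src = new URL(surveyBaseUrl);
  src.searchParams.set("embed", "mturk");             // hypothetical flag read by the survey
  src.searchParams.set("assignmentId", assignmentId); // lets the survey tag its data
  src.searchParams.set("preview", String(assignmentId === PREVIEW_ASSIGNMENT_ID));
  return src.toString();
}
```

In the template, the result would be assigned to the iframe, for example `iframe.src = buildSurveySrc(window.location.href, "https://tobias-wetzel.com/thesis/survey/")`. The survey could then render read-only during preview.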

Cross-Frame Communication Protocol

One of the most critical aspects was establishing secure, real-time communication between the MTurk frame and the embedded survey. I implemented this with the browser's postMessage API, which lets two frames exchange messages even when they are served from different origins.
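A message protocol like this is only as safe as the receiving side's checks. The sketch below shows the HIT-page side of a hypothetical protocol. It accepts a message only if it comes from the survey's origin, assumed here to be `https://tobias-wetzel.com`, and has one of three expected shapes. The message types and field names are illustrative, not the exact ones used in cbow-lift.html.

```typescript
// Hypothetical messages the embedded survey may send to the HIT page.
type SurveyMessage =
  | { type: "answers"; answers: Record<string, string> }
  | { type: "progress"; completedSlides: number; totalSlides: number }
  | { type: "resize"; height: number };

// ASSUMPTION: the survey is served from this origin; anything else is ignored.
const SURVEY_ORIGIN = "https://tobias-wetzel.com";

function isStringRecord(value: unknown): value is Record<string, string> {
  if (typeof value !== "object" || value === null || Array.isArray(value)) return false;
  const record = value as Record<string, unknown>;
  return Object.keys(record).every((key) => typeof record[key] === "string");
}

// Validate origin and shape before trusting a message; returns null for anything unexpected.
export function parseSurveyMessage(origin: string, data: unknown): SurveyMessage | null {
  if (origin !== SURVEY_ORIGIN) return null;
  if (typeof data !== "object" || data === null) return null;
  const msg = data as Record<string, unknown>;
  const { answers, completedSlides, totalSlides, height } = msg;
  switch (msg.type) {
    case "answers":
      return isStringRecord(answers) ? { type: "answers", answers } : null;
    case "progress":
      return typeof completedSlides === "number" && typeof totalSlides === "number"
        ? { type: "progress", completedSlides, totalSlides }
        : null;
    case "resize":
      return typeof height === "number" && isFinite(height) && height > 0
        ? { type: "resize", height }
        : null;
    default:
      return null;
  }
}

// Browser wiring on the HIT page (sketch):
// window.addEventListener("message", (event) => {
//   const msg = parseSurveyMessage(event.origin, event.data);
//   if (msg) { /* update answer state, progress, or iframe height */ }
// });
```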

Smart Validation System

The integration includes comprehensive validation to ensure data quality:

Completion Tracking: Monitors whether all survey slides are completed

Answer Validation: Ensures all required questions are answered

Real-time Feedback: Provides immediate user feedback for incomplete submissions

State Management: Maintains answer state across page interactions
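The four checks above can be condensed into a single pure function that the HIT page runs before allowing submission. This is a sketch. The `SurveyState` shape and the wording of the messages are assumptions.

```typescript
// Hypothetical survey state kept by the HIT page as messages arrive (state management).
interface SurveyState {
  totalSlides: number;
  completedSlides: number;
  requiredQuestions: string[];
  answers: Record<string, string>;
}

// Returns participant-facing problems; an empty array means the HIT may be submitted.
export function validateSubmission(state: SurveyState): string[] {
  const problems: string[] = [];
  // Completion tracking: every slide must have been reached.
  if (state.completedSlides < state.totalSlides) {
    problems.push(`Please complete all slides (${state.completedSlides}/${state.totalSlides} done).`);
  }
  // Answer validation: required questions must have a non-blank answer.
  const missing = state.requiredQuestions.filter((id) => (state.answers[id] ?? "").trim() === "");
  if (missing.length > 0) {
    problems.push(`Please answer the remaining required questions: ${missing.join(", ")}.`);
  }
  return problems;
}
```

For real-time feedback, a submit handler in the browser would call this function. If the returned array is non-empty, the handler would call `event.preventDefault()` and show the messages instead of submitting.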

The Survey Platform: Technical Deep Dive

Next.js Architecture

The survey platform is built on Next.js 14.1.0, providing:

Server-Side Rendering: Fast initial page loads

Dynamic Routing: Flexible survey deployment via /survey/[url]/page.tsx

Static Generation: Pre-rendered survey pages for optimal performance

TypeScript Integration: Type safety throughout the application
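For the dynamic route, the App Router's `generateStaticParams` export tells Next.js which `[url]` values to pre-render at build time. Setting `dynamicParams = false` makes any other value return a 404. The sketch below covers only the data side of such a page file, and the JSX page component is omitted. The survey registry, its slugs (named after the study batches listed later in this post), and the `getSurvey` helper are hypothetical.

```typescript
// Sketch of the data side of app/survey/[url]/page.tsx (Next.js App Router).
// HYPOTHETICAL: the registry, slugs, and SurveyConfig shape are illustrations only.
interface SurveyConfig {
  title: string;
}

const SURVEYS: Record<string, SurveyConfig> = {
  "cbow-lift": { title: "CBOW lift explanations" },
  "shap-pdp": { title: "SHAP and PDP explanations" },
  "surrogate-tree": { title: "Surrogate tree explanations" },
};

// Next.js calls this at build time and pre-renders one page per returned params object.
export function generateStaticParams(): { url: string }[] {
  return Object.keys(SURVEYS).map((url) => ({ url }));
}

// Only the slugs returned above are served; any other [url] value yields a 404.
export const dynamicParams = false;

// Lookup used by the (omitted) page component; own keys only, so "toString" is not a survey.
export function getSurvey(url: string): SurveyConfig | undefined {
  return Object.prototype.hasOwnProperty.call(SURVEYS, url) ? SURVEYS[url] : undefined;
}
```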

Key Technical Innovations

1. Real-Time Answer Synchronization

Unlike traditional survey platforms, answers are sent to the MTurk HIT frame in real time as participants complete each question. This guards against data loss and enables immediate validation.
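Here is a minimal sketch of the survey side of this synchronization, assuming answers are strings keyed by question ID. Every change posts a full snapshot of the answers to the parent frame. The parent window sits behind a small interface so the logic can run outside a browser; in the survey page you would pass `window.parent`. The `createAnswerSync` name and the message shape are hypothetical.

```typescript
// Minimal view of the parent window; in the survey page, pass window.parent.
interface ParentWindow {
  postMessage(message: unknown, targetOrigin: string): void;
}

// Hypothetical answer store that forwards a full snapshot to the HIT page on every change.
export function createAnswerSync(parent: ParentWindow, parentOrigin: string) {
  const answers: Record<string, string> = {};
  return function recordAnswer(questionId: string, value: string): void {
    answers[questionId] = value;
    // Copy the object so later changes do not mutate snapshots already handed off.
    parent.postMessage({ type: "answers", answers: { ...answers } }, parentOrigin);
  };
}
```

Sending the whole snapshot instead of only the changed answer costs a few extra bytes. The benefit is that if an early message arrives before the parent's listener is ready, the next message still delivers everything. Passing an explicit `parentOrigin` rather than `"*"` prevents answers from being delivered to an unexpected embedding page.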

2. Dynamic UI Adaptation

The iframe automatically adjusts its height based on content, providing a seamless user experience that feels native to MTurk rather than embedded.
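One way to implement the automatic resizing is to have the survey report its content height and let the HIT page apply that height to the iframe. The sketch below shows a hypothetical reporter that posts only when the rounded height changes, so layout jitter does not flood the parent with identical messages. The browser wiring with `ResizeObserver` appears in comments because it needs a DOM.

```typescript
// Minimal view of the parent window; in the survey page, pass window.parent.
interface ParentWindow {
  postMessage(message: unknown, targetOrigin: string): void;
}

// Hypothetical reporter: posts the content height only when the rounded value changes.
export function createHeightReporter(parent: ParentWindow, parentOrigin: string) {
  let lastHeight = -1;
  return function reportHeight(height: number): void {
    const rounded = Math.ceil(height); // round up so content is never clipped
    if (rounded === lastHeight) return;
    lastHeight = rounded;
    parent.postMessage({ type: "resize", height: rounded }, parentOrigin);
  };
}

// Browser wiring in the survey page (sketch):
// const report = createHeightReporter(window.parent, PARENT_ORIGIN);
// new ResizeObserver(() => report(document.documentElement.scrollHeight)).observe(document.body);
// The HIT page's "resize" handler would then set iframe.style.height = `${height}px`.
```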

3. Advanced Visualization Integration

The system successfully embeds complex DeckGL visualizations within MTurk HITs, opening new possibilities for research requiring interactive data exploration.
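The DeckGL code is not shown in this post. As a hedged sketch of the data-preparation step, the function below turns 3D word-embedding coordinates and cluster labels into point objects. A deck.gl `ScatterplotLayer` could read these through its `getPosition` and `getFillColor` accessors. The `ScatterPoint` shape, the palette, and the function name are assumptions. The layer construction is left as a comment so the block runs without deck.gl installed.

```typescript
type RGB = [number, number, number];

interface ScatterPoint {
  word: string;
  position: [number, number, number];
  color: RGB;
}

// Hypothetical categorical palette; cluster indices beyond its length wrap around.
const PALETTE: RGB[] = [
  [31, 119, 180], [255, 127, 14], [44, 160, 44], [214, 39, 40], [148, 103, 189],
];

// Cluster labels are assumed to be non-negative integers.
export function toScatterPoints(
  words: string[],
  coords: Array<[number, number, number]>,
  clusters: number[],
): ScatterPoint[] {
  if (words.length !== coords.length || words.length !== clusters.length) {
    throw new Error("words, coords and clusters must have the same length");
  }
  return words.map((word, i) => ({
    word,
    position: coords[i],
    color: PALETTE[clusters[i] % PALETTE.length],
  }));
}

// With deck.gl installed, the points could feed a layer such as:
// new ScatterplotLayer({ id: "embeddings", data: points,
//   getPosition: (d) => d.position, getFillColor: (d) => d.color, getRadius: 0.05 });
```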

4. Robust Error Handling

Comprehensive error handling ensures participants receive clear feedback and researchers obtain high-quality data.

Deployment Results and Scale

The system was deployed across multiple research studies:

Batch Performance Overview

From the MTurk batches dashboard, we can see the successful deployment across multiple studies:

SHAP-PDP Series: Multiple batches (SHAP-PDP 4, 5, 6) with 100% completion rates

CBOW-Lift Studies: Successfully completed batches with 9/9 participants each

Surrogate Tree Studies: Multiple iterations with consistent completion rates

Total: 10+ completed batches over two months (November to December 2024)

Lessons Learned

Technical Insights

Cross-frame security is paramount when integrating with MTurk

Real-time communication significantly improves user experience

WebAssembly is essential for computationally intensive visualizations

Progressive enhancement ensures compatibility across different devices

Research Methodology

Interactive elements can significantly improve data quality

Immediate validation reduces participant frustration

Visual feedback helps participants understand complex tasks

Mobile optimization is crucial for MTurk participant diversity

Future Enhancements

The system's modular architecture allows for future enhancements:

Advanced Analytics: Real-time participant behavior tracking

AI-Powered Quality Control: Automated detection of low-quality responses

Extended Visualization Library: Support for additional chart types and interactions

Conclusion

This custom MTurk integration demonstrates how modern web technologies can enhance academic research capabilities. By combining the crowdsourcing power of MTurk with advanced data visualization and real-time communication, researchers can conduct more sophisticated studies while maintaining data quality and participant engagement.

The system serves as a template for future research projects requiring complex interactive elements within crowdsourcing platforms, showing that it's possible to push the boundaries of what's achievable in online research studies.